It is a very human instinct to trust somebody more the better we know them. And how well we know somebody is often a result of the amount of time we spend with them. This level of trust often determines how much we are willing to believe, or be influenced by, them.

Influence comes from trust.

Interestingly, many people assign human attributes not only to other people but to other things as well, including pets, inanimate objects (“I stubbed my toe on that stupid rock”) and, most importantly, interactive systems (such as computers). In fact, regarding interactive systems, we are rapidly moving towards the point where a growing number of people assign the same level of trust to an online venue as they might to a real-life friend or family member. This seems especially true in the area of search and information distribution systems that are, by design, “user” friendly (translate “more humanistic”).

Unfortunately, in a world where we are all “publishers” and sources of information, not all information has the same value or trustworthiness.

As a result, many people are exposing themselves to a veritable web of incorrect or manipulated information. To some extent we are abdicating our very human instincts of questioning and common sense to information sources that are inherently unreliable, are easily manipulated or simply overwhelm us with the sheer volume of information that we have to deal with on a daily basis. In fact, it seems many are lowering their friendship and trust requirements to meet the abilities of the Internet-based systems with which they interact every day.

Let me illustrate with a few examples:

Example 1: Google v Bing (Nicaragua v Costa Rica)

For over a century, Nicaragua and Costa Rica have argued over a relatively small, yet seemingly important, slice of land along their north-eastern border (a dispute that was supposedly settled diplomatically over a century ago with the aid of the U.S. government). This dispute, now in front of the Organization of American States (OAS), has been renewed, with Nicaragua reclaiming the land and deploying military troops (Costa Rica, by the way, has no military of its own). It has even become part of a controversy surrounding the “rumored” desire of Nicaragua, Venezuela and Iran to build an alternative to the Panama Canal (there’s a conspiracy to bite into!).

Google, with the unenviable (but self-allocated) task of tracking global borders, is at the center of this renewed dispute, having used an admittedly erroneous map which featured an incorrect/out-dated border. Nicaragua has been touting the incorrect Google Maps version (which was subsequently/conveniently used as part of their justification to move troops into Costa Rica) while Costa Rica has been touting the correct Microsoft Bing version (yes, Bing got it right). Interestingly, Google Maps has since corrected the border, a correction that Nicaragua in turn has requested not be made.

For many, being right equates to being first, or more well known, on the Internet.

On its surface, this would seem like a trivial non-event, especially given the history of the world and the inability of anybody to accurately map the world (a task that is extremely difficult as borders are constantly changing and the world is full of “disputed” territories). But Google has made their share of mistakes, including recently deleting the entire town of Sunrise, Florida (and its 90,000 inhabitants). More importantly, had Nicaragua not taken action against Costa Rica, I doubt that more than a handful of people in the entire world would have noticed the error, nor would anybody else (and I’ll include myself here) have had any reason to question its accuracy.

Example 2: The Wikipedia Incident.

I have a good friend who recently recounted an amazingly absurd event involving Wikipedia: the crowd-sourced online encyclopedia that has increasingly become an acceptable source of “it must be true if it is on Wikipedia” information. My friend is an expert in a certain field and happened to discover an error involving a certain event in early American history. Being the helpful guy that he is, he went through the Wikipedia registration process and corrected what was a fairly obvious error. However, the following day he discovered that his correction had been subjected to a classic “undo” and reverted back to the original inaccurate text.

Influence or control don’t always equate to experience.

After several “re-corrections” and a series of similar “undo” events, he finally tracked down the person that was rejecting his corrections – a person who apparently had a bit more Wiki-clout than my friend. Although he explained that the information on Wikipedia was incorrect, and cited numerous written texts and documents as proof, his corrections were clearly and decisively rejected – the justification essentially being that the original text was corroborated by another Wikipedia entry (which itself was not only incorrect but used the original incorrect entry in a circular reference between the two entries, demonstrating that two Wiki-wrongs do in fact make a Wiki-right). So much for crowd-sourced intelligence when the crowd you are dealing with is a crowd of one.

Example 3: The $200M/Day Obama trip to India

This one simply defies logic. An official in the Maharashtra Government (a state in western India) is referenced by a story on NDTV.com which stated “A top official of the Maharashtra government privy to the arrangements for the high-profile visit has reckoned that a whopping $200 million per day would be spent by various teams coming from the US in connection with Obama’s two-day stay in the city.” This included, by the way, the erroneous renting out of the entire Taj Mahal Hotel in Mumbai and covering the costs of a (massively overstated) 3,000 person entourage (including the press, which works on their dime, not the U.S. government’s checking account).

This “creative” estimate from the “privy” official, as well as a subsequent NDTV.com report that 34 U.S. warships (including an aircraft carrier) were being diverted to support the President’s visit to India, were picked up by the Drudge Report and subsequently became fact amongst the anti-Obama press in the U.S. By this time, a mere hour later, of course, the $200M/day for the two days in India had become $2 billion: $200M/day for not just India but for the entire 10-day Asia trip (side note: we only spend $190M/day on our entire war effort in Afghanistan – see this nice rebuke by the WSJ.com). Despite a dubious source, and a series of incorrect enhancements courtesy of the instant Internet, the story gained traction – significant traction well beyond what normal common sense would dictate.

Where has our common sense gone?

There was a time when both our individual and collective common sense would have immediately questioned each of the three examples above. But increasingly that is no longer the case. We have replaced questioning of sources and common sense with a misplaced sense of trust in an often automated (or highly manipulated) information stream.

If we interact with an information system enough, we learn to trust it. This is not always a good thing.

We now live in a world where search engines (knowing that we’re more likely to click on search results at the top of the list) allow the manipulation of search results. We live in a world where not only can you sponsor Tweets on Twitter, but you can now sponsor Twitter Trends (talk about the ultimate ability to take advantage of “what’s hot now”).

Is there anything left that we can trust? Yes. Our common sense. And that is our challenge – not only to make sure that we ourselves question and fact-check what we see, read or hear (in any information/news medium), but also to lead: to encourage, influence and empower others to do the same.

If we can do that, then perhaps we have a chance to restore some sanity and faith to this digital world in which we all live.

Note: Map Graphic courtesy of Erika Orban, Wikipedia image courtesy of Wikipedia, Barack Obama image source unknown

Last week I participated in an interesting discussion regarding influence and the role of analyst relations (AR) – specifically around the issue of how AR staff could increase their influence through a variety of different mechanisms or channels. But one key point that kept creeping into the conversation was one of limited resources: “we simply don’t have the staff to aggressively pursue everything that we would like to accomplish” (a point echoed by many in smaller or fast-growing firms).

My first true experience in the world of B2B marketing was in 1990. My task was simple: market one of our new products to company “X”. My task could not have been simpler – while “X” was not a current customer/partner, they were very well known for purchasing our type of product, which was then integrated and resold as part of their own, much larger, product line.

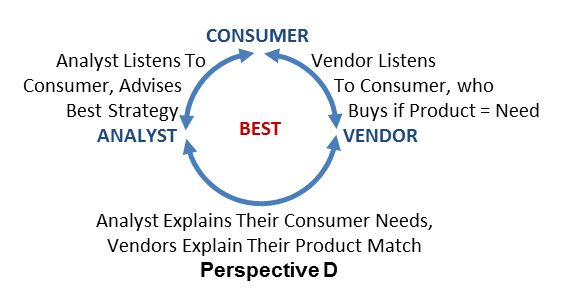

In this way, the best possible product offerings can be designed and deployed, giving each of the three participants what they need – the best value for their dollar spent. Note too that in Perspective D I have placed the Consumer at the top of the circle, since they ultimately control what is purchased, and their needs and requirements should be what ultimately influences the market.
